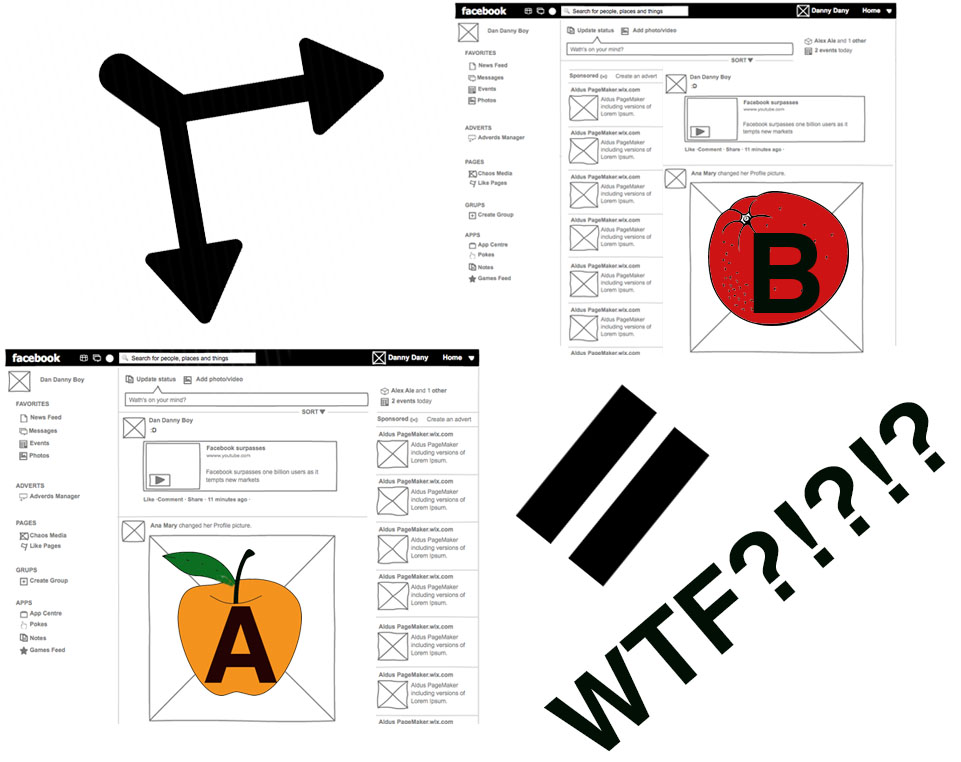

“Testing? Yeah, we do that, been doing that for a couple of years. A/B testing is at the core of what we do, mate. I can’t get enough of it.” I get told this a lot, but when I ask a few more questions a common scene emerges. Allow me to set that scene. You are part of a design review session and there is a disagreement: the HiPPO (the highest paid person’s opinion) and a member of the team do not agree on a particular element of a design. One person believes one thing, the other believes another. You interrupt with “We can A/B test that when it’s live”, and everyone does that little nod to say “Yeah OK, let’s do that, then we will see who is right”. Everyone is still friends, and somewhere down the line you and everyone else forget what the disagreement was about and, in truth, forget to do the test. Sadly, this is quite normal in a lot of companies.

A/B testing is the big capability most digital organisations are desperately trying to acquire. If you look at job descriptions for mid to senior product roles from the back end of last year, most include something along the lines of “knowledge of A/B testing”, usually listed under essential requirements. There is a reason for that: most digital organisations are either not doing it and need someone to set them up, or they are doing it but haven’t got a clue how to use it in a meaningful way. Used appropriately, A/B testing can be a great, lean way to optimise. It can help establish tone of wording, attractiveness of a design and the general user journey. At the other end of the spectrum, though, you can end up with a bunch of unanswered questions attached to a now inconsistent service where nobody knows who is testing what or why, leaving you with a Frankenstein’s monster.

Here are some tips to consider when establishing or re-jigging your A/B testing empire.

Test that your testing software actually works: Look at the end goal

If your A/B test says you have a winner, look at the tangible end result of the test. If you made a change to see whether a layout would increase consumption, then actually check the consumption numbers. A lot of testing software claims to test multiple things via many avenues to get to that “true north”, but in reality only a handful of those metrics might actually be working, as there may be factors on your site, outside of the software, that lead it to produce false positives.
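One way to do that check yourself is to pull the raw visitor and conversion counts from your own analytics and run a basic significance test on them, independently of the testing tool. The sketch below is only an illustration, not what any particular tool does: it assumes a simple two-proportion z-test is good enough for your metric, and the function name and the numbers in the usage example are made up.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates.

    conv_a / n_a: conversions and visitors for the control (A).
    conv_b / n_b: conversions and visitors for the variant (B).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that A and B really perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (e.g. zero conversions), so nothing to compare.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts taken from your own records, not from the testing tool.
    z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

If the tool announces a winner but your own numbers give a p-value well above the threshold you agreed on, that is a prompt to dig into how the tool is measuring things before anyone acts on the result.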

Do not run tons of tests on the same thing at the same time

Keep it simple. I have seen an organisation run 15 different variations of a headline and be surprised there wasn’t a clear winner. Of course there wouldn’t be: unless the other 14 headlines were “if you are an idiot click here”, it will always be too close to call.
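A bit of arithmetic shows why piling on variations hurts. The sketch below rests on simplifying assumptions that are mine, not the organisation's: traffic is split evenly, each variation is compared against one control, and the comparisons are treated as independent (which is only an approximation when they share a control). The function names are illustrative.

```python
def visitors_per_variant(total_visitors, num_variants):
    """Visitors each variant receives if traffic is split evenly."""
    return total_visitors // num_variants


def family_wise_error_rate(num_comparisons, alpha=0.05):
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** num_comparisons


def bonferroni_alpha(num_comparisons, alpha=0.05):
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / num_comparisons


if __name__ == "__main__":
    variants = 15
    comparisons = variants - 1  # 14 variations, each against one control
    print(visitors_per_variant(30_000, variants))         # hypothetical traffic
    print(round(family_wise_error_rate(comparisons), 2))  # risk of a fluke "winner"
    print(round(bonferroni_alpha(comparisons), 4))        # threshold each test must beat
```

Under those assumptions, with 14 comparisons at the usual 5% threshold the odds of at least one fluke result are roughly a coin flip, while each headline only sees a fifteenth of the traffic. Fewer variations means more data per variation and fewer chances to fool yourself.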

Once you have your results, do something with them

It’s all well and good testing something, but make sure interested parties actually see the results. There is nothing worse than hearing about a test you commissioned long after the fact and finding that no action was taken.

Small tests, often

Don’t fall into the trap of launching full redesigns to a small percentage of your audience over a lengthy period. Yes, you might have a clear winner, but you won’t learn why it won or what makes it a winner. Keep things small, quick and nimble. Use the results effectively and keep them as “lessons learned”, which leads me to my next point.

Keep a record of what worked and what didn’t work

Share with other teams! There is nothing worse than teams on the same site performing the same tests in isolation. It wastes time and resource, and slows everything down. I am not saying never test anything anyone has done before, but make an informed decision, and you can only make an informed decision with all the information available to you.

Focus your efforts

A/B tests will not tell you why a failing part of your service is failing. They are there to help define what optimisation should look like for the test subject, based on the testing environment you have set out. So focus your efforts on just that; the big “oh s**t, we need to look at this ASAP” stuff requires a lot more than A/B testing to put right. Focus on optimising with A/B testing and nothing else.

Have a test plan

Have a log of all the things you want to test, and treat it like a little backlog filled with problem statements. Establish a process where, for a test to be conducted, you need: a problem statement, a benchmark, two or more test subjects, a predefined testing period, a measure of success and so on. Once you have a basic framework of what should be in a test case, you can prioritise the cases and list them out in your plan.
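If it helps to make that framework concrete, here is one possible shape for a test case and a plan, sketched in Python. Everything in it is an assumption for illustration: the `PlannedTest` name, the field names, and the 1-to-5 priority scale are invented, and a spreadsheet with the same columns would do the job just as well.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class PlannedTest:
    problem_statement: str
    benchmark: str          # where the metric stands today
    variants: List[str]     # the two or more test subjects
    start: date
    end: date               # predefined testing period
    success_measure: str
    priority: int = 3       # hypothetical scale: 1 = do first, 5 = do last

    def missing_parts(self) -> List[str]:
        """List what still needs filling in before this test can run."""
        missing = []
        if not self.problem_statement.strip():
            missing.append("problem statement")
        if not self.benchmark.strip():
            missing.append("benchmark")
        if len(self.variants) < 2:
            missing.append("two or more test subjects")
        if self.end <= self.start:
            missing.append("testing period")
        if not self.success_measure.strip():
            missing.append("measure of success")
        return missing


def build_plan(backlog: List[PlannedTest]) -> List[PlannedTest]:
    """Keep only complete test cases and order them by priority."""
    ready = [test for test in backlog if not test.missing_parts()]
    return sorted(ready, key=lambda test: test.priority)
```

Anything that comes back from `missing_parts()` with gaps stays in the backlog until someone fills them in, which is a cheap way of stopping “we can A/B test that when it’s live” from turning into a test nobody can interpret.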